In the modern world, there is no way without data storage. And the history of working with databases began a very long time ago, with the advent of JDBC. I propose to remember something that no modern framework built on top of JDBC can do without. In addition, even when working with them, at times you may need the opportunity to “return to your roots.” I hope this review will help as an introduction or help refresh your memory.

![JDBC or where it all begins - 1]()

![JDBC or where it all begins - 2]() This standard is described by the specification " JSR 221 JDBC 4.1 API ". This specification tells us that the JDBC API provides programmatic access to relational databases from programs written in Java. It also tells that the JDBC API is part of the Java platform and is therefore included in Java SE and Java EE. The JDBC API is provided in two packages: java.sql and javax.sql. Let's get to know them then.

This standard is described by the specification " JSR 221 JDBC 4.1 API ". This specification tells us that the JDBC API provides programmatic access to relational databases from programs written in Java. It also tells that the JDBC API is part of the Java platform and is therefore included in Java SE and Java EE. The JDBC API is provided in two packages: java.sql and javax.sql. Let's get to know them then.

![JDBC or where it all begins - 3]()

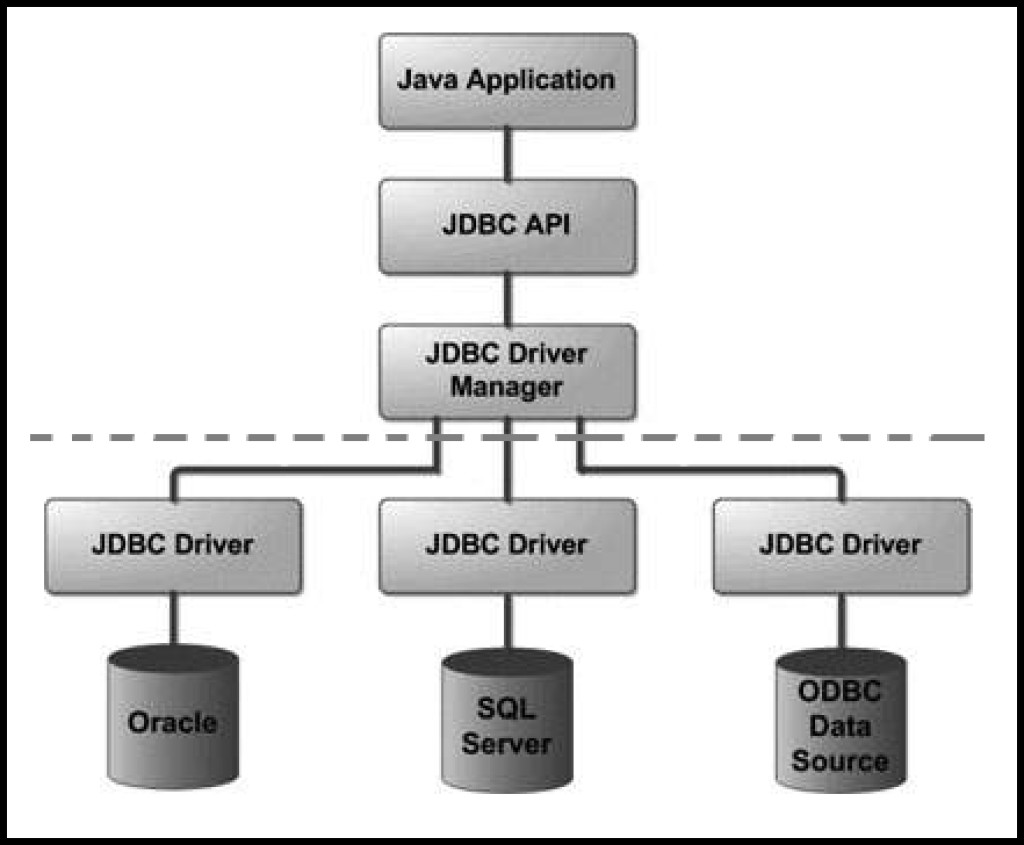

![JDBC or where it all begins - 4]() As we can see, and this is logical, the database is an external component that is not native to Java SE. This is explained simply - there are a huge number of databases and you can work with any one. For example, there is PostgreSQL, Oracle, MySQL, H2. Each of these databases is supplied by a separate company called database vendors. Each database is written in its own programming language (not necessarily Java). In order to be able to work with the database from a Java application, the database provider writes a special driver, which is its own image adapter. Such JDBC compatible ones (that is, those that have a JDBC driver) are also called “JDBC-Compliant Database”. Here we can draw an analogy with computer devices. For example, in a notepad there is a "Print" button. Every time you press it, the program tells the operating system that the notepad application wants to print. And you have a printer. To teach your operating system to communicate uniformly with a Canon or HP printer, you will need different drivers. But for you, as a user, nothing will change. You will still press the same button. Same with JDBC. You are running the same code, it's just that different databases may be running under the hood. I think this is a very clear approach. Each such JDBC driver is some kind of artifact, library, jar file. This is the dependency for our project. For example, we can select the database " H2 Database " and then we need to add a dependency like this:

As we can see, and this is logical, the database is an external component that is not native to Java SE. This is explained simply - there are a huge number of databases and you can work with any one. For example, there is PostgreSQL, Oracle, MySQL, H2. Each of these databases is supplied by a separate company called database vendors. Each database is written in its own programming language (not necessarily Java). In order to be able to work with the database from a Java application, the database provider writes a special driver, which is its own image adapter. Such JDBC compatible ones (that is, those that have a JDBC driver) are also called “JDBC-Compliant Database”. Here we can draw an analogy with computer devices. For example, in a notepad there is a "Print" button. Every time you press it, the program tells the operating system that the notepad application wants to print. And you have a printer. To teach your operating system to communicate uniformly with a Canon or HP printer, you will need different drivers. But for you, as a user, nothing will change. You will still press the same button. Same with JDBC. You are running the same code, it's just that different databases may be running under the hood. I think this is a very clear approach. Each such JDBC driver is some kind of artifact, library, jar file. This is the dependency for our project. For example, we can select the database " H2 Database " and then we need to add a dependency like this:

![JDBC or where it all begins - 5]()

![JDBC or where it all begins - 6]()

![JDBC or where it all begins - 7]()

![JDBC or where it all begins - 8]()

![JDBC or where it all begins - 9]()

![JDBC or where it all begins - 10]()

![JDBC or where it all begins - 11]()

![JDBC or where it all begins - 12]()

Introduction

One of the main purposes of a programming language is storing and processing information. To better understand how data storage works, it’s worth spending a little time on the theory and architecture of applications. For example, you can read the literature, namely the book " Software Architect's Handbook: Become a successful software architect by implementing effective arch... " by Joseph Ingeno. As said, there is a certain Data Tier or “Data Layer”. It includes a place to store data (for example, a SQL database) and tools for working with a data store (for example, JDBC, which will be discussed). There is also an article on the Microsoft website: “ Designing an infrastructure persistence layer ,” which describes the architectural solution of separating an additional layer from the Data Tier - the Persistence Layer. In this case, the Data Tier is the level of storage of the data itself, while the Persistence Layer is some level of abstraction for working with data from the storage from the Data Tier level. The Persistence Layer can include the "DAO" template or various ORMs. But ORM is a topic for another discussion. As you may have already understood, the Data Tier appeared first. Since the time of JDK 1.1, JDBC (Java DataBase Connectivity - connection to databases in Java) has appeared in the Java world. This is a standard for interaction of Java applications with various DBMSs, implemented in the form of java.sql and javax.sql packages included in Java SE:

Beginning of work

To understand what the JDBC API is in general, we need a Java application. It is most convenient to use one of the project assembly systems. For example, let's use Gradle . You can read more about Gradle in a short review: " A Brief Introduction to Gradle ". First, let's initialize a new Gradle project. Since Gradle functionality is implemented through plugins, we need to use “ Gradle Build Init Plugin ” for initialization:gradle init --type java-applicationdependencies {

// This dependency is found on compile classpath of this component and consumers.

implementation 'com.google.guava:guava:26.0-jre'

// Use JUnit test framework

testImplementation 'junit:junit:4.12'

}

dependencies {

implementation 'com.h2database:h2:1.4.197'

Connection

So, we have a JDBC driver, we have a JDBC API. As we remember, JDBC stands for Java DataBase Connectivity. Therefore, it all starts with Connectivity - the ability to establish a connection. And connection is Connection. Let's turn again to the text of the JDBC specification and look at the table of contents. In the chapter " CHAPTER 4 Overview " (overview) we turn to the section " 4.1 Establishing a Connection " (establishing a connection) it is said that there are two ways to connect to the database:- Via DriverManager

- Via DataSource

Connection con = DriverManager.getConnection(url, user, passwd);@Test. Unit tests are not the topic of this review, so we will simply limit ourselves to the understanding that these are methods described in a certain way, the purpose of which is to test something. According to the JDBC specification and the H2 website, we will check that we have received a connection to the database. Let's write a method for obtaining a connection:

private Connection getNewConnection() throws SQLException {

String url = "jdbc:h2:mem:test";

String user = "sa";

String passwd = "sa";

return DriverManager.getConnection(url, user, passwd);

}@Test

public void shouldGetJdbcConnection() throws SQLException {

try(Connection connection = getNewConnection()) {

assertTrue(connection.isValid(1));

assertFalse(connection.isClosed());

}

}private static Connection connection;@Before

public void init() throws SQLException {

connection = getNewConnection();

}

@After

public void close() throws SQLException {

connection.close();

}

Statements

Next we are interested in Statements or expressions. They are described in the documentation in the chapter " CHAPTER 13 Statements ". Firstly, it says that there are several types or types of statements:- Statement: SQL expression that contains no parameters

- PreparedStatement : Prepared SQL statement containing input parameters

- CallableStatement: SQL expression with the ability to obtain a return value from SQL Stored Procedures.

private int executeUpdate(String query) throws SQLException {

Statement statement = connection.createStatement();

// Для Insert, Update, Delete

int result = statement.executeUpdate(query);

return result;

}private void createCustomerTable() throws SQLException {

String customerTableQuery = "CREATE TABLE customers " +

"(id INTEGER PRIMARY KEY, name TEXT, age INTEGER)";

String customerEntryQuery = "INSERT INTO customers " +

"VALUES (73, 'Brian', 33)";

executeUpdate(customerTableQuery);

executeUpdate(customerEntryQuery);

}@Test

public void shouldCreateCustomerTable() throws SQLException {

createCustomerTable();

connection.createStatement().execute("SELECT * FROM customers");

}@Test

public void shouldSelectData() throws SQLException {

createCustomerTable();

String query = "SELECT * FROM customers WHERE name = ?";

PreparedStatement statement = connection.prepareStatement(query);

statement.setString(1, "Brian");

boolean hasResult = statement.execute();

assertTrue(hasResult);

}

ResultSet

The concept of a ResultSet is described in the JDBC API specification in the chapter "CHAPTER 15 Result Sets". First of all, it says that ResultSet provides methods for retrieving and manipulating the results of executed queries. That is, if the execute method returned true to us, then we can get a ResultSet. Let's move the call to the createCustomerTable() method into the init method, which is marked as @Before. Now let's finalize our shouldSelectData test:@Test

public void shouldSelectData() throws SQLException {

String query = "SELECT * FROM customers WHERE name = ?";

PreparedStatement statement = connection.prepareStatement(query);

statement.setString(1, "Brian");

boolean hasResult = statement.execute();

assertTrue(hasResult);

// Обработаем результат

ResultSet resultSet = statement.getResultSet();

resultSet.next();

int age = resultSet.getInt("age");

assertEquals(33, age);

}@Test

public void shouldInsertInResultSet() throws SQLException {

Statement statement = connection.createStatement(ResultSet.TYPE_SCROLL_SENSITIVE, ResultSet.CONCUR_UPDATABLE);

ResultSet resultSet = statement.executeQuery("SELECT * FROM customers");

resultSet.moveToInsertRow();

resultSet.updateLong("id", 3L);

resultSet.updateString("name", "John");

resultSet.updateInt("age", 18);

resultSet.insertRow();

resultSet.moveToCurrentRow();

}RowSet

In addition to ResultSet, JDBC introduces the concept of RowSet. You can read more here: " JDBC Basics: Using RowSet Objects ". There are various variations of use. For example, the simplest case might look like this:@Test

public void shouldUseRowSet() throws SQLException {

JdbcRowSet jdbcRs = new JdbcRowSetImpl(connection);

jdbcRs.setCommand("SELECT * FROM customers");

jdbcRs.execute();

jdbcRs.next();

String name = jdbcRs.getString("name");

assertEquals("Brian", name);

}CachedRowSet jdbcRsCached = new CachedRowSetImpl();

jdbcRsCached.acceptChanges(connection);

Metadata

In addition to queries, a connection to the database (i.e., an instance of the Connection class) provides access to metadata - data about how our database is configured and organized. But first, let’s mention a few key points: The URL for connecting to our database: “jdbc:h2:mem:test”. test is the name of our database. For the JDBC API, this is a directory. And the name will be in uppercase, that is, TEST. The default schema for H2 is PUBLIC. Now, let's write a test that shows all user tables. Why custom? Because databases contain not only user tables (those that we ourselves created using create table expressions), but also system tables. They are necessary to store system information about the structure of the database. Each database can store such system tables differently. For example, in H2 they are stored in the " INFORMATION_SCHEMA " schema. Interestingly, INFORMATION SCHEMA is a common approach, but Oracle went a different route. You can read more here: " INFORMATION_SCHEMA and Oracle ". Let's write a test that receives metadata on user tables:@Test

public void shoudGetMetadata() throws SQLException {

// У нас URL = "jdbc:h2:mem:test", где test - название БД

// Название БД = catalog

DatabaseMetaData metaData = connection.getMetaData();

ResultSet result = metaData.getTables("TEST", "PUBLIC", "%", null);

List<String> tables = new ArrayList<>();

while(result.next()) {

tables.add(result.getString(2) + "." + result.getString(3));

}

assertTrue(tables.contains("PUBLIC.CUSTOMERS"));

}

Connection pool

The connection pool in the JDBC specification has a section called "Chapter 11 Connection Pooling". It also provides the main justification for the need for a connection pool. Each Coonection is a physical connection to the database. Its creation and closing is quite an "expensive" job. JDBC only provides a connection pooling API. Therefore, the choice of implementation remains ours. For example, such implementations include HikariCP . Accordingly, we will need to add a pool to our project dependency:dependencies {

implementation 'com.h2database:h2:1.4.197'

implementation 'com.zaxxer:HikariCP:3.3.1'

testImplementation 'junit:junit:4.12'

}private DataSource getDatasource() {

HikariConfig config = new HikariConfig();

config.setUsername("sa");

config.setPassword("sa");

config.setJdbcUrl("jdbc:h2:mem:test");

DataSource ds = new HikariDataSource(config);

return ds;

}@Test

public void shouldGetConnectionFromDataSource() throws SQLException {

DataSource datasource = getDatasource();

try (Connection con = datasource.getConnection()) {

assertTrue(con.isValid(1));

}

}

Transactions

One of the most interesting things about JDBC is transactions. In the JDBC specification, they are assigned the chapter "CHAPTER 10 Transactions". First of all, it is worth understanding what a transaction is. A transaction is a group of logically combined sequential operations on data, processed or canceled as a whole. When does a transaction start when using JDBC? As the specification states, this is handled directly by the JDBC Driver. But usually, a new transaction begins when the current SQL statement requires a transaction and the transaction has not yet been created. When does the transaction end? This is controlled by the auto-commit attribute. If autocommit is enabled, the transaction will be completed after the SQL statement is "completed". What "done" means depends on the type of SQL expression:- Data Manipulation Language, also known as DML (Insert, Update, Delete)

The transaction is completed as soon as the action is completed Select Statements

- CallableStatement and expressions that return multiple results

When all associated ResultSets have been closed and all output has been received (including the number of updates)

The transaction is completed when the ResultSet is closed ( ResultSet#close )

@Test

public void shouldCommitTransaction() throws SQLException {

connection.setAutoCommit(false);

String query = "INSERT INTO customers VALUES (1, 'Max', 20)";

connection.createStatement().executeUpdate(query);

connection.commit();

Statement statement = connection.createStatement();

statement.execute("SELECT * FROM customers");

ResultSet resultSet = statement.getResultSet();

int count = 0;

while(resultSet.next()) {

count++;

}

assertEquals(2, count);

}

Insulation levels

Let's open the subsection "10.2 Transaction Isolation Levels" of the JDBC specification. Here, before moving further, I would like to remember about such a thing as ACID. ACID describes the requirements for a transactional system.- Atomicity:

No transaction will be partially committed to the system. Either all of its sub-operations will be performed, or none will be performed. - Consistency:

Each successful transaction, by definition, records only valid results. - Isolation:

While a transaction is running, concurrent transactions should not affect its outcome. - Durability:

If a transaction is successfully completed, the changes made to it will not be undone due to any failure.

ext {

h2Version = '1.3.176' // 1.4.177

hikariVersion = '3.3.1'

junitVersion = '4.12'

}dependencies {

implementation "com.h2database:h2:${h2Version}"

implementation "com.zaxxer:HikariCP:${hikariVersion}"

testImplementation "junit:junit:${junitVersion}"

}@Test

public void shouldGetReadUncommited() throws SQLException {

Connection first = getNewConnection();

assertTrue(first.getMetaData().supportsTransactionIsolationLevel(Connection.TRANSACTION_READ_UNCOMMITTED));

first.setTransactionIsolation(Connection.TRANSACTION_READ_UNCOMMITTED);

first.setAutoCommit(false);

// Транзакиця на подключение. Поэтому первая транзакция с ReadUncommited вносит изменения

String insertQuery = "INSERT INTO customers VALUES (5, 'Max', 15)";

first.createStatement().executeUpdate(insertQuery);

// Вторая транзакция пытается их увидеть

int rowCount = 0;

JdbcRowSet jdbcRs = new JdbcRowSetImpl(getNewConnection());

jdbcRs.setCommand("SELECT * FROM customers");

jdbcRs.execute();

while (jdbcRs.next()) {

rowCount++;

}

assertEquals(2, rowCount);

}

Bottom line

As we can see, JDBC is a powerful tool in the hands of Java for working with databases. I hope this short review will help give you a starting point or help refresh your memory. Well, for a snack, some additional materials:- Fire report: " Transactions: myths, surprises and opportunities " from Martin Kleppmann

- Yuri Tkach: " JPA. Transactions "

- Yurik Tkach: " JDBC - Java for testers "

- Free course on Udemy: " JDBC and MySQL "

- " Handling CallableStatement Objects "

- IBM Developer: " Java Database Connectivity "

- IBM Knowledge Center: " Getting started with JDBC "

GO TO FULL VERSION