The debate about artificial intelligence and its dangers and benefits for society began long ago. Many science fiction books and popular science articles have been written about AI and its impact on the world around us, many films have been made, and the topic regularly comes up in blogs and on social networks. Today we present an adapted translation of an article by João Duarte, in which he shares his view on the subject.

In early November 2017, I attended the World Symposium: Artificial Intelligence and Inclusion, which brought together more than 200 people from around the world. I was personally interested in two questions: (1) how to build AI solutions that take social and cultural values into account, and (2) how to use AI in education. In this article, I want to share five takeaways from the event.

1. AI can cause harm (even when that is not its purpose)

The development of AI brings real benefits in areas such as education, medicine, media, management, and so on. But at the same time, its use can be harmful and discriminatory.

Deviant AI behavior can have several causes:

- biased or low-quality training data;

- poorly formulated rules;

- use outside the context the system was designed for;

- feedback loops.

| Deviant behavior (also called social deviation) is persistent behavior by an individual that departs from generally accepted, established social norms. |

Solid Gold Bomb was a T-shirt company that used AI to generate slogans to print on its products. One of the phrases the algorithm produced was “Keep Calm and Rape a Lot.” This seemingly small AI mistake cost the company everything. The case was widely covered in the media and drew a strong public backlash. After the scandal, Amazon stopped working with Solid Gold Bomb, and the company ceased to exist.

Of course, the algorithm was never meant to create such offensive content. It worked simply: it picked verbs from a list and inserted them into the slogan template, and eventually it picked the word “rape.” This is a clear example of poor-quality data combined with poorly defined rules.
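To make this failure concrete, here is a minimal Python sketch of a template-based slogan generator, assuming it simply fills one fixed template with verbs from an unvetted list. The verb list, the `BLOCKLIST` set and both function names are hypothetical; the real generator was never published. The naive version emits every combination, while the filtered version applies one explicit rule first.

```python
# Hypothetical sketch: a slogan generator that fills one template with verbs.
# The verb list stands in for unvetted input data; the real list is unknown.
TEMPLATE = "Keep Calm and {verb} a Lot"
VERBS = ["dance", "laugh", "hit", "read", "sing"]

# Hypothetical rule the naive generator lacked: verbs that must never be used.
BLOCKLIST = {"hit"}


def naive_slogans(verbs: list[str]) -> list[str]:
    """Fill the template with every verb, with no checks at all."""
    return [TEMPLATE.format(verb=v.capitalize()) for v in verbs]


def filtered_slogans(verbs: list[str], blocklist: set[str]) -> list[str]:
    """Fill the template only with verbs that are not on the blocklist."""
    return [
        TEMPLATE.format(verb=v.capitalize())
        for v in verbs
        if v.lower() not in blocklist
    ]


if __name__ == "__main__":
    print(naive_slogans(VERBS))                # includes "Keep Calm and Hit a Lot"
    print(filtered_slogans(VERBS, BLOCKLIST))  # the blocked verb is gone
```

A blocklist only catches words someone thought of in advance, so in practice it would complement, not replace, human review of generated content.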

Nikon added a feature to its cameras that warned the photographer when someone in the shot blinked. Some time later, users began reporting that the feature misfired when photographing people of East Asian appearance. Even though Nikon is a Japanese company, its algorithm apparently did not account for the eye shapes common among people in East Asia.

Remember the movie "Minority Report" with Tom Cruise? In the film, crimes were predicted before they happened, so the police could stop them in advance. Something similar is happening today.

PredPol uses AI to predict crime and help police allocate patrols more efficiently. The company trained its model on crime reports recorded by the police. But not every offense gets detected and recorded: crimes are recorded most often in the areas that are patrolled most heavily. This can trap the program in a loop, where it keeps sending patrols to areas that are already heavily patrolled, which in turn produces more reports from those areas. Here again we see biased data combined with a feedback loop. So even when AI is built for a completely different purpose, it can still cause harm.
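The feedback loop can be illustrated with a toy simulation. This is not PredPol's actual model; it is a deliberately simplified sketch assuming that (1) each day the single patrol goes to the district with the most recorded crime and (2) crime is recorded only where the patrol is. The function name `simulate_patrols` and all numbers are hypothetical.

```python
def simulate_patrols(true_rates: list[int], initial_counts: list[int], days: int) -> list[int]:
    """Toy model of a predictive-policing feedback loop (hypothetical).

    true_rates[i]: crimes that actually occur in district i each day.
    initial_counts[i]: crimes already on record for district i.
    Each day the patrol goes to the district with the highest recorded count,
    and only crimes in that district get recorded.
    """
    counts = list(initial_counts)
    for _ in range(days):
        # "Prediction": send the patrol where the records say crime is highest.
        target = max(range(len(counts)), key=lambda i: counts[i])
        # Only the patrolled district's crimes end up in the data.
        counts[target] += true_rates[target]
    return counts


if __name__ == "__main__":
    # District 1 actually has MORE crime, but district 0 starts with one more record.
    print(simulate_patrols(true_rates=[10, 12], initial_counts=[6, 5], days=30))
    # prints [306, 5]: district 0 receives every patrol, district 1 never changes
```

Even though district 1 has more real crime, the records never show it, because no patrol is ever sent there to observe it. The data ends up confirming the model's own earlier decisions.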

2. AI can learn to be fair (sometimes much better than humans)

It is not easy to determine what is fair and what is not. Each case needs to be considered individually, taking into account the law, cultural context, values and different reasonable points of view. However, if we single out the cases that are unambiguously “good” or “evil,” artificial intelligence can learn from such examples. AI works by learning how to use variables to produce a target outcome defined by an objective function. We can improve the fairness of AI by encoding fairness criteria in the objective function or as constraints on the variables. If we deliberately decide to teach AI a notion of fairness, we must keep in mind that the system will apply it more literally than people would, because AI is much better than we are at following rules.

Numerous examples illustrate this. For example, the Brazilian organization Desabafo Social ran a campaign showing examples of discrimination in the Shutterstock and Google image search engines. One finding: if you search for "baby" on Shutterstock, most of the photos are likely to show white babies. (Translator's note: on Google, the first result will be Justin Bieber's video "Baby".)

Search results do not reflect the real state of the world, but a search engine can be taught to do so. If one of its goals is to "reflect the diversity of the world's population", the system can draw on vast amounts of data to ensure representation across racial, gender and cultural lines. In such tasks, machines are more efficient than people. In this example, the AI's output is discriminatory because it was trained that way, but we can design better algorithms to avoid this.
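One way to apply the idea of fairness as a constraint to search results is a greedy re-ranker that enforces a minimum share for each group in every prefix of the results list. This is a simplified sketch, not how Shutterstock or Google actually rank images: the `Result` type, the group labels, the scores and the `min_share` parameter are all hypothetical. At each position, if some group has fallen below its required share, the re-ranker takes that group's most relevant remaining item; otherwise it takes the most relevant item overall.

```python
import math
from dataclasses import dataclass


@dataclass
class Result:
    item_id: str
    group: str    # hypothetical attribute label attached to each image
    score: float  # relevance score from a hypothetical base ranker


def rerank(results: list[Result], min_share: dict[str, float], k: int) -> list[Result]:
    """Greedy top-k re-ranking with a minimum-share constraint per group.

    For every prefix of length p, each group g should have at least
    floor(min_share[g] * p) items, as long as it has items left.
    """
    remaining = sorted(results, key=lambda r: r.score, reverse=True)
    counts = {g: 0 for g in min_share}
    ranked: list[Result] = []
    for pos in range(1, min(k, len(results)) + 1):
        # Groups that would violate their minimum share at this prefix length.
        behind = {g for g, share in min_share.items()
                  if counts[g] < math.floor(share * pos)}
        candidates = [r for r in remaining if r.group in behind]
        # remaining is sorted by score, so index 0 is always the most relevant.
        pick = candidates[0] if candidates else remaining[0]
        remaining.remove(pick)
        ranked.append(pick)
        if pick.group in counts:
            counts[pick.group] += 1
    return ranked


if __name__ == "__main__":
    results = [Result(f"a{i}", "A", 1.0 - 0.02 * i) for i in range(1, 6)]
    results += [Result(f"b{i}", "B", 0.8 - 0.02 * i) for i in range(1, 4)]
    top = rerank(results, {"A": 0.4, "B": 0.4}, k=5)
    print([r.item_id for r in top])  # ['a1', 'a2', 'b1', 'a3', 'b2']
```

Without the constraint, the top five would contain only group A. The re-ranker gives up a little relevance at positions 3 and 5 in exchange for representation; choosing the shares themselves is a value judgment, not a technical one.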

3. AI is forcing us to rethink our ethical and moral principles

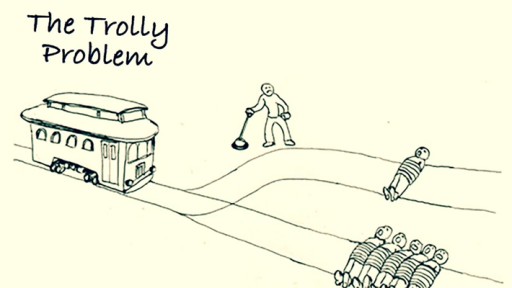

As we saw earlier, AI optimizes variables to achieve a specific goal. Thinking about these goals keeps raising ethical and moral questions that were debated long ago and have now become relevant again. There are also new questions, prompted by the capabilities of AI. In the first lecture of his Harvard course on justice, philosopher Michael Sandel discusses a moral dilemma called the trolley problem, first posed in 1905. It models a classic moral dilemma: is it acceptable to harm one or more people in order to save the lives of others?

Here is the situation: a heavy, runaway trolley is speeding along the rails. Ahead of it, five people are tied to the track. Fortunately, you can throw the switch, and the trolley will turn onto a siding. Unfortunately, one person is also tied to the track on the siding. What do you do? If you do nothing, the trolley will kill five people. If you throw the switch, it will kill only one. Autonomous vehicle engineers face a similar problem when they must decide whether to prioritize the lives of people inside the vehicle or outside it.

4. There is no such thing as "AI neutrality"

The use of AI is affecting and changing our lives. For example, we use Google Maps to find the shortest route and no longer need to ask people on the street for directions. As a result, we talk less with locals when we travel to an unfamiliar place or another city. Or consider how children interact with the virtual assistants Siri and Alexa. This is a double-edged sword. On the one hand, children become more curious, because they can ask about anything at any time of day. On the other hand, parents note that children are becoming less polite: they no longer need to say “please” and “thank you.” This is changing how kids learn to interact with the world.

Illustration by Bee Johnson for the Washington Post

| “Algorithms are not neutral. If we change the algorithm, we will change reality.” (Desabafo Social) |

However, AI engineers can change this by programming assistants to respond to rude requests with something like "Hey, you need to use the magic word." We can build intentions into AI and thereby promote a particular point of view. So although AI cannot be neutral, it can be a tool for encouraging desirable behavior in society. Instead of striving for neutrality, we should design AI deliberately, with well-considered intentions.
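As a minimal sketch of the "magic word" idea, assuming a text-based assistant, the hypothetical `respond` function below checks a request for a polite word before answering. Real assistants such as Siri and Alexa are far more complex, and nothing here reflects their actual APIs; the word list and reply strings are illustrative only.

```python
import re

# Hypothetical politeness markers; whole-word, case-insensitive matches only.
POLITE_PATTERN = re.compile(r"\b(please|thanks|thank you)\b", re.IGNORECASE)


def respond(request: str) -> str:
    """Answer the request, but nudge the user if it contains no polite word."""
    if not POLITE_PATTERN.search(request):
        return "Hey, you need to use the magic word."
    return f"Sure! Working on: {request}"


if __name__ == "__main__":
    print(respond("Play my song"))         # Hey, you need to use the magic word.
    print(respond("Please play my song"))  # Sure! Working on: Please play my song
```

The point is the design choice itself: whether the assistant ignores rudeness or pushes back is decided by whoever writes this rule, which is why the system cannot be neutral.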

5. AI needs diversity to succeed

AI algorithms will always reflect the point of view of their creators. It follows that the best way to ensure the success of AI is to promote diversity in the field. AI engineers should be people of different nationalities, men and women, from different racial and cultural backgrounds. This is how the necessary variety of perspectives can be achieved. For this to happen, we need to broaden access to AI education and make this knowledge available to different groups of people.

Kathleen Siminyu, a Kenyan data scientist, launched the Nairobi chapter of Women in Machine Learning and Data Science (WiMLDS). The project plays a vital role in increasing diversity in the AI engineering community.

I hope you find this article useful. I believe that understanding the ethics of AI is the first step toward fairer and more inclusive AI.
